Project: Depth from Defocus

Overview

My goal in this project was to implement a method for obtaining the depth of a scene from several images taken with varying focus. The method relies on the observation that the blurriness of out-of-focus objects gives hints about their depth. This problem is known to be ill-posed [1]. Most solutions involve tradeoffs between efficiency, noise, precision, and any number of other variables.

I have chosen to implement the method described in [2]. The paper describes the process in detail, and others [3] have used the technique to achieve reasonable depth estimates. The process involves a spatial-domain convolution/deconvolution and assumes that the scene is planar with respect to the focal plane. The method requires only two images to estimate a solution, and the camera parameters can be varied by changing either the focus plane depth or the aperture size.

All project files can be downloaded.

This is a brief overview of the derivation of the depth from defocus solution. A more detailed derivation is found in the paper.

When an image is focused in front of or behind the image plane, a blur circle is formed on the image plane [2][4][5]. The size of this blur circle depends on the depth of the point that causes it. Given the lens equation, the radius of the blur circle can be calculated as follows:

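$$R = s \frac{D}{2}\left[\frac{1}{f} - \frac{1}{u} - \frac{1}{s}\right]$$

Here s is the distance from the lens to the image plane, D is the aperture diameter, f is the focal length, and u is the distance from the lens to the object point.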

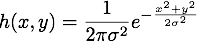
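The blur radius formula is easy to check numerically. The following is a minimal sketch, assuming s is the lens-to-image-plane distance, D the aperture diameter, f the focal length, and u the object distance, all in the same unit. The function name and the camera numbers are illustrative and are not taken from the project files.

```python
def blur_circle_radius(s, D, f, u):
    """Signed blur-circle radius R = s * (D / 2) * (1/f - 1/u - 1/s).

    s: lens-to-image-plane distance, D: aperture diameter,
    f: focal length, u: object distance (all in the same length unit).
    """
    return s * (D / 2.0) * (1.0 / f - 1.0 / u - 1.0 / s)


# Illustrative numbers only: a 50 mm lens with a 25 mm aperture.
f, D = 50.0, 25.0
u_focus = 1000.0                        # object distance that is in focus
s = 1.0 / (1.0 / f - 1.0 / u_focus)     # thin-lens equation: 1/f = 1/u + 1/s

for u in (500.0, 1000.0, 2000.0):
    print(u, blur_circle_radius(s, D, f, u))
```

The radius is zero for a point on the focus plane and changes sign depending on whether the point lies nearer or farther than that plane, which is one reason a single blur measurement alone does not determine depth.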

Given that the blur circle appears as a circular gradient of decreasing brightness on the image plane, it can be approximated by a Gaussian point spread function (PSF):

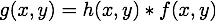

Using this PSF and the focused real world image, a defocused image can be obtained by convolving the focused image with the PSF:

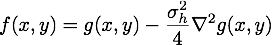
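The convolution step can be sketched with NumPy alone. This is a simplified illustration, assuming an isotropic Gaussian PSF sampled on the pixel grid and truncated at three standard deviations, with reflected borders. The function names and these choices are assumptions for the example, not details taken from [2].

```python
import numpy as np


def gaussian_kernel_1d(sigma, radius=None):
    """Sampled, normalized 1-D Gaussian; radius defaults to ceil(3 * sigma)."""
    if radius is None:
        radius = int(np.ceil(3.0 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def defocus(image, sigma):
    """Blur `image` with an isotropic Gaussian PSF (separable convolution)."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(np.asarray(image, dtype=float), r, mode="reflect")
    # Convolve every row, then every column; "valid" removes the padding again.
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


# A sharp vertical step edge and its defocused version.
sharp = np.zeros((32, 32))
sharp[:, 16:] = 1.0
blurred = defocus(sharp, sigma=2.0)
print(sharp.var(), blurred.var())   # the blurred image has the lower variance
```

Because the kernel is normalized, flat regions keep their brightness and only the edges are spread out, which is what lowers the gray-level variance of the defocused image.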

Then, using a spatial-domain transform and manipulations in polar space, a deconvolution filter based on the Laplacian of the image and the standard deviation of the PSF is obtained:

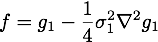

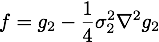

Finally, given two images of different focus, we can begin solving for the standard deviation of the PSF (essentially the blur radius):

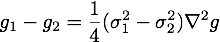

Then, in order to solve for the radius in the presence of noise, the two equations are equated and the Laplacian is replaced with the mean of both Laplacians:

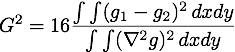

Squaring and summing in a small region gives rise to the following integral:

The root is:

The sign is ambiguous because the previous terms were squared. This is resolved by estimating the sign from the gray-scale variance of the two images. The variance corresponds to the 'blurriness' of the images: the blurrier image has the lower variance.
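The steps above (equating the two deconvolutions, averaging the Laplacians, squaring and summing over a region, then fixing the sign by variance) can be sketched as follows. The sketch rests on the small-blur approximation g ≈ f + (s²/2)∇²f for a Gaussian PSF with per-axis standard deviation s, which gives g1 − g2 ≈ ((s1² − s2²)/2)∇²g. The constant factor depends on how the spread parameter is defined, so it may differ from the constants in the equations of [2]. A plain 5-point Laplacian stands in for the smoothed filters of [6], and all names are illustrative.

```python
import numpy as np


def laplacian(img):
    """5-point finite-difference Laplacian on the interior (border dropped)."""
    img = np.asarray(img, dtype=float)
    return (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
            - 4.0 * img[1:-1, 1:-1])


def blur_difference(g1, g2):
    """Estimate s1**2 - s2**2 over one region from two defocused images.

    s1 and s2 are per-axis Gaussian standard deviations in pixels.
    """
    g1 = np.asarray(g1, dtype=float)
    g2 = np.asarray(g2, dtype=float)
    lap = 0.5 * (laplacian(g1) + laplacian(g2))   # mean of both Laplacians
    diff = (g1 - g2)[1:-1, 1:-1]                  # same extent as the Laplacian
    denom = np.sum(lap ** 2)
    if denom == 0.0:
        return float("nan")   # no texture in this region, blur is unobservable
    magnitude = 2.0 * np.sqrt(np.sum(diff ** 2) / denom)
    # Squaring lost the sign: the lower-variance image is taken as the blurrier one.
    sign = 1.0 if g1.var() < g2.var() else -1.0
    return sign * magnitude


# Smooth test pattern whose Gaussian blur is known in closed form:
# a cosine of frequency w is attenuated by exp(-(s * w)**2 / 2).
y, x = np.mgrid[0:64, 0:64]
w = 0.2
pattern = np.cos(w * x) + np.cos(w * y)
s1, s2 = 2.0, 1.0
g1 = np.exp(-0.5 * (s1 * w) ** 2) * pattern
g2 = np.exp(-0.5 * (s2 * w) ** 2) * pattern
print(blur_difference(g1, g2))   # roughly s1**2 - s2**2 = 3
```

Calling the function on small windows instead of the whole image would give a per-region estimate. Swapping the two inputs flips the sign of the result, which is the ambiguity the variance test resolves.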

Finally, using a relation between the two standard deviations involving the two camera parameters, a solution for the second image's blur is found based on the previous integral and camera parameters:
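One way to see where such a relation comes from is to assume the PSF spread is proportional to the signed blur radius, sigma = k * R, with k a camera constant (a hypothetical name used only here). Writing the blur radius formula for both camera settings and eliminating 1/u then gives a linear relation sigma1 = alpha * sigma2 + beta. Substituting that into G = sigma1² − sigma2² leaves a quadratic in sigma2. The sketch below follows that reasoning; it is not the code from the project files, and the camera numbers are made up.

```python
import math


def blur_relation(k, cam1, cam2):
    """Coefficients (alpha, beta) of sigma1 = alpha * sigma2 + beta.

    Each cam is a tuple (s, D, f); sigma is assumed to equal k * R.
    """
    (s1, D1, f1), (s2, D2, f2) = cam1, cam2
    m1, m2 = k * s1 * D1 / 2.0, k * s2 * D2 / 2.0
    alpha = m1 / m2
    beta = m1 * ((1.0 / f1 - 1.0 / s1) - (1.0 / f2 - 1.0 / s2))
    return alpha, beta


def solve_sigma2(G, alpha, beta):
    """Real roots of (alpha^2 - 1) x^2 + 2 alpha beta x + beta^2 - G = 0."""
    a, b, c = alpha ** 2 - 1.0, 2.0 * alpha * beta, beta ** 2 - G
    if abs(a) < 1e-12:                  # alpha = 1: the equation is linear
        return [-c / b] if b != 0.0 else []
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return []
    root = math.sqrt(disc)
    return [(-b + root) / (2.0 * a), (-b - root) / (2.0 * a)]


def depth_from_sigma(sigma, k, cam):
    """Invert sigma = k * s * (D / 2) * (1/f - 1/u - 1/s) for the depth u."""
    s, D, f = cam
    inv_u = 1.0 / f - 1.0 / s - sigma / (k * s * D / 2.0)
    return 1.0 / inv_u


# Illustrative numbers only: same lens and aperture, two sensor positions.
k = 0.5
cam1, cam2 = (52.0, 25.0, 50.0), (53.0, 25.0, 50.0)   # (s, D, f)
u_true = 1000.0
sigma = [k * s * D / 2.0 * (1.0 / f - 1.0 / u_true - 1.0 / s)
         for s, D, f in (cam1, cam2)]
G = sigma[0] ** 2 - sigma[1] ** 2

alpha, beta = blur_relation(k, cam1, cam2)
candidates = [depth_from_sigma(x, k, cam2) for x in solve_sigma2(G, alpha, beta)]
print(candidates)   # one candidate recovers u_true; the other root is spurious
```

When alpha differs from 1 the quadratic has two roots, and the sketch does not decide between them; it only shows that the true depth is among the candidates.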

My implementation is very similar to the one described in [2]. A minor difference lies in the final estimation of the blur radius. Since the original focus of the method was accurate estimation of the depth of a planar object for auto-focusing, the radius was taken to be the mode of a filtered histogram over the entire image. I am interested in obtaining a depth map over the entire image, so I did not perform the histogram filtering. Instead, in order to be robust to noise, a median filter is applied to the resulting blur estimate. This removes outliers caused by noise.
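A median filter of this kind can be sketched in a few lines of NumPy. The window size and the edge handling below are assumptions for the example, since the values used in the project are not stated here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter(blur_map, size=5):
    """Replace each value with the median of its size x size neighbourhood."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    r = size // 2
    # Repeat the border values so the output keeps the input shape.
    padded = np.pad(np.asarray(blur_map, dtype=float), r, mode="edge")
    windows = sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1))


# A flat blur map with a single noisy estimate in the middle.
blur_map = np.full((9, 9), 2.0)
blur_map[4, 4] = 50.0
filtered = median_filter(blur_map)
print(filtered[4, 4])   # the isolated outlier is replaced by the surrounding value
```

Unlike a mean filter, the median leaves a straight step between two flat regions unchanged, which matters when the blur map contains a depth discontinuity.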

A note on the filters used in the implementation: the entire premise of [2] is that the image is treated as a cubic polynomial. The recommended filters in this case are based on Chebyshev polynomials and are described in [6].

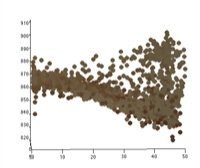

In general, my implementation cannot be used to extract reasonable depth maps for most objects. This may be due in part to the equifocal assumption present in this method. Nonetheless, while the depth maps are too flat to match the actual depth, the estimated depth is generally close to the measured focus planes of the images. The estimated depth is often several centimeters shorter than the actual distance from the camera. It should be noted that the authors of the paper described a similar occurrence, which they attributed to improper design in the camera. For the purposes of consistency, I shall do likewise.

All images were processed at 300x300 with a filter size of 16, which results in a kernel size of 33 pixels (2 × 16 + 1). For most of my tests, I found the blurriness estimate to be less than convincing. Only in the synthetic test did it produce an estimate that clearly corresponded to the image blur. It is possible that image noise or the lack of magnification normalization causes this issue.

Synthetic test

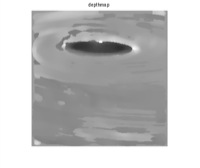

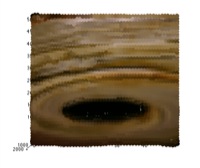

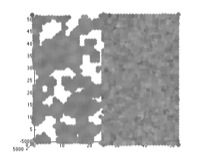

This is a constructed image with two planar surfaces. The right half of the image is blurred to give the impression that it is closer to the camera. Note that the camera parameters are not valid, and thus the depth is not either (only the shape is meaningful).

While the far plane suffers from a large amount of noise in the depth map, the shape is preserved. It should be noted that the blurriness estimate is very accurate, far more so than for any of the other images. The holes in the 3D image are caused by points being removed by the noise filter.

Close image |

Far image |

|

Close laplacian |

Far laplacian |

|

| Bluriness Black = in image1 White = in image2 |

|

|

Integral solution |

Root of integral |

|

Blur radius |

Depth map |

|

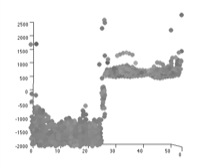

3d top |

3d side |

Heart sheet

Small vase

[1] H. Jin and P. Favaro, A variational approach to shape from defocus, Proc. IEEE European Conference on Computer Vision, 2002

[2] M. Subbarao and G. Surya, Depth from defocus: A spatial domain approach, IJCV, 1994

[3] Kyuman J., et al., Digital shallow depth-of-field adapter for photographs, The Visual Computer: International Journal of Computer Graphics, 2008

[4] A. Pentland, A new sense for depth of field, IEEE Trans. Pattern Anal. Mach. Intell., 9:523-531, 1987

[5] J. Ens and P. Lawrence, An investigation of methods for determining depth from focus, IEEE Trans. Pattern Anal. Mach. Intell., 15:97-108, 1993

[5] P. Favaro and S. Soatto, Learning depth from defocus, Proc. IEEE European Conference on Computer Vision, 2002

[6] P. Meer and I. Weiss, Smoothed differentiation filters for images, Proc. 10th International Conference on Pattern Recognition, 1990